What is a codec and why do you need one?

A codec is a tool that compresses the visual information in a video file to make it easier to send and store. Each codec has two parts: an encoder that compresses the video and a decoder that translates it back into a viewable form. In fact, the word codec is a blend of COder-DECoder (some people expand it as COmpressor-DECompressor).

Video creators, hosting services, and streaming platforms use codecs to keep file sizes manageable. Think about it: if we had to store the color of every pixel in every frame, the file would be enormous, nearly 4 GB per minute for 720p video at 24 frames per second.
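
To see where a figure like that comes from, here is a rough back-of-the-envelope calculation. It is only a sketch: the 24 frames per second and 3 bytes per pixel (8 bits each for red, green, and blue) are our assumptions, and the function name is made up for illustration.

```python
def uncompressed_bytes_per_minute(width, height, fps, bytes_per_pixel=3):
    """Raw size of one minute of video when every pixel is stored in full."""
    bytes_per_frame = width * height * bytes_per_pixel
    return bytes_per_frame * fps * 60

# 720p frame (1280 x 720), assumed 24 fps and 8-bit RGB
size = uncompressed_bytes_per_minute(1280, 720, fps=24)
print(f"{size / 1e9:.2f} GB per minute")  # 3.98 GB per minute
```

At 30 frames per second the same math lands closer to 5 GB per minute, so the exact number depends on the frame rate you assume.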

Files that large would overwhelm most websites and slow streaming to a trickle, so computer scientists devised codecs to shrink them.

Here are some common codecs:

- H.264 (AVC)

- H.265 (HEVC)

- Animation

- ProRes

- Sorenson (older)

Codecs vs Video Formats

Some people confuse codecs and video formats. It’s an easy mistake to make since video container formats do contain codecs. If you think of a video as a letter, the codec is the language the letter is written in, the video itself is the meaning or message being conveyed, and the file format is the envelope that encloses both of those.

Video formats are represented by the file extension. You might be familiar with formats like:

- QuickTime (.mov)

- MP4 (.mp4)

- Windows Media Video (.wmv)

Different codecs can be used with each of these file formats, depending on which stage of the video-making and distribution process you’re in.

What Is The Best Codec To Use?

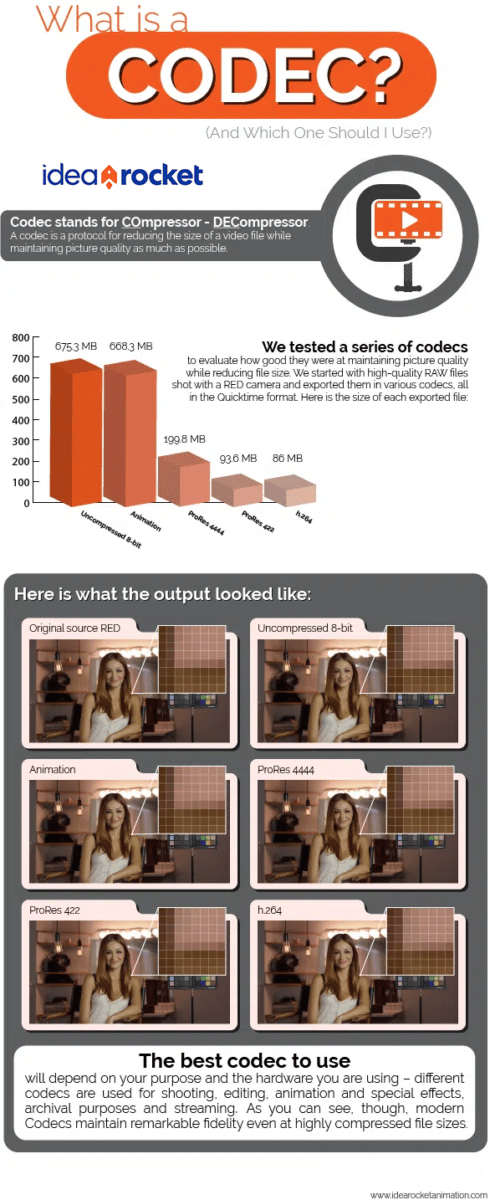

The answer depends on your purpose. Different codecs are used at different stages of the video-making and distribution process. The infographic compares the codecs commonly used at each of these stages:

- Shooting (RAW)

- Editing (ProRes 4:2:2 and Uncompressed 10-bit)

- Animation and Special Effects (Animation)

- Mastering and archiving (ProRes 4:4:4)

- Streaming or Distributing (H.264)

As we talk about some of these codecs, keep in mind that codecs can be classified as either lossless or lossy. As the names imply, lossless codecs keep all of the information, so they cause no loss of quality. Lossy codecs, on the other hand, discard information that viewers are unlikely to miss, which results in some loss of quality. The trade-off is that you get a much smaller file.

Codecs Worth Knowing About

- Animation: This is a lossless codec. The only sort of compression it uses is Run-Length Encoding. (More on that in a bit.) It is usually only used in 2D or 3D animation production, or in special effects. Since it depends on continuous areas of color for its compression power, it does little to reduce file size when used for live action.

- H.264: This lossy codec was revolutionary when it came out in 2003. The dominant codec before then was Sorenson, and H.264 improved both picture quality and compression immensely, fueling an explosion of video content. Most of the video you see online still uses this codec. We usually deliver the explainer videos we make using .mp4 and H.264. The quality is so high that it is even accepted for many broadcast deliveries!

- H.265: A newer, more advanced lossy codec, H.265 (also called HEVC) offers higher compression rates than H.264. Differences in the way data is compressed mean that H.265 needs roughly half as much bandwidth as its predecessor for similar quality, but you’ll also need more powerful hardware to encode it.

- ProRes: This is a lossy Apple-branded codec that was built for use in Final Cut Pro, but has stuck around after FCP was sidelined. Video creators with a recent iPhone Pro model (iPhone 13 Pro or later) can still choose to record and edit in ProRes. It comes in a great many flavors for different applications, like editing (4:2:2) or mastering and archiving (4:4:4).

- Uncompressed 8-bit or 10-bit: This is one of video’s great misnomers. Uncompressed video is actually compressed using Chroma Sub-sampling! We’ll explain what that means below. It is a codec used for editing.

- AV1: The new lossy codec on the block. Many experts predict that AV1 will become the next dominant codec for internet video. It delivers video quality equal to or better than HEVC at a lower bit rate, which makes streaming 4K and even 8K content more efficient. It’s also open source, royalty free, and supported by platforms like YouTube, Amazon Prime, Twitch, Netflix and Meta (Facebook).

So what is the best codec to use? Go for the one recommended for your hardware or identified in the tech specs of your video host.

What Is A Codec Bit Rate?

We’ve mentioned bit rate a few times, so before we get into the technical details of how codecs actually work, let’s define it. Bit rate is the amount of data a codec uses for each second of video, so it reflects how heavily a clip has been compressed. It’s usually expressed in megabits per second (Mbps). A codec that outputs at 8 Mbps gives us a file that grows by 8 megabits (1 megabyte) for every second of video.
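
The arithmetic is simple enough to sketch in a few lines. This is a rough estimate only: it ignores audio and container overhead, and the function name is ours, not part of any encoder.

```python
def file_size_megabytes(bit_rate_mbps, duration_seconds):
    """Approximate video size: megabits per second x seconds, then bits to bytes."""
    total_megabits = bit_rate_mbps * duration_seconds
    return total_megabits / 8  # 8 bits in a byte

# One minute of video at 8 Mbps
print(file_size_megabytes(8, 60))  # 60.0
```

Compare that 60 MB with the roughly 4 GB a minute of raw 720p would take, and you can see how much work the codec is doing.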

A mistake that many people make is to confuse bit rate with quality. Some codecs can produce excellent images at a bit rate that would produce a chunky mess in older, inferior codecs.

If you are rendering out a video, your export settings will often ask what bit rate you would like to output at. Keep in mind that codecs usually have a sweet range where they produce the best images at the smallest file sizes. Larger bit rates usually bring only marginal improvement, while smaller bit rates can quickly degrade the image. Experimentation (or just experience) will help you find a codec’s sweet range.

How Do Codecs Work?

You know what they are and how they’re used. You’ve even gotten to know some of the major players in the codec world. But there’s still one big question we haven’t answered. How do codecs actually work? The answer is: it depends. Various codecs use different techniques to reduce file size.

Common compression techniques include:

- Run-Length Encoding

- Bit Depth

- Chroma Sub-sampling

- Spatial Compression, and

- Temporal Compression

Run-Length Encoding: Say you have a big patch of your video image that is the same color. Instead of repeating color information for every pixel in the frame, you can specify that a range of pixels are all the same color. For example: “this pixel is x color, and the following 35 pixels are the same color too.” This is a lossless method.
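
Here is a minimal sketch of the idea, using a single row of pixels. The color names and function names are placeholders for illustration; a real codec works on numeric pixel values and packs the runs into bytes.

```python
def rle_encode(pixels):
    """Collapse consecutive identical values into (value, count) runs."""
    runs = []
    for value in pixels:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1          # same color as the current run: extend it
        else:
            runs.append([value, 1])   # new color: start a new run
    return [(value, count) for value, count in runs]

def rle_decode(runs):
    """Expand (value, count) runs back into the original pixel list."""
    pixels = []
    for value, count in runs:
        pixels.extend([value] * count)
    return pixels

# "This pixel is blue, and the following 35 pixels are the same color too."
row = ["blue"] * 36 + ["white"] * 4
encoded = rle_encode(row)
print(encoded)  # [('blue', 36), ('white', 4)]
assert rle_decode(encoded) == row  # lossless: decoding gives back the exact row
```

Forty pixels shrink to two runs, and nothing is lost. On a noisy live-action frame, where neighboring pixels rarely match exactly, the runs would be short and the savings small.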

Bit Depth: Video usually uses 8 bits (binary digits) per color channel to define a color. That gives 256 gradations per channel, which usually produces a pretty good image, but if we want more definition we can use 10 bits per channel (1,024 gradations). This is what 10-bit Uncompressed video does, and it actually makes the file a bit bigger. Likewise, we can reduce file size by describing color with fewer than 8 bits. The tell-tale sign that a file has been compressed this way is banding in the gradated areas of the image.
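
A quick sketch shows why banding appears. It drops an 8-bit value to 4 bits by throwing away the low bits; the 4-bit target and the function name are our choices for illustration, not something a particular codec does.

```python
def reduce_bit_depth(value, from_bits=8, to_bits=4):
    """Keep only the top `to_bits` bits of a channel value."""
    shift = from_bits - to_bits
    return (value >> shift) << shift

print(2 ** 8, 2 ** 10)  # 256 1024 gradations for 8-bit and 10-bit

# A smooth gradient sampled at 32 evenly spaced brightness levels
gradient = list(range(0, 256, 8))
reduced = [reduce_bit_depth(v) for v in gradient]
print(len(set(gradient)), "->", len(set(reduced)))  # 32 -> 16
```

Neighboring shades collapse into the same value, so a smooth ramp turns into visible steps.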

Chroma Sub-sampling: Our eyes are designed to be very sensitive to brightness, but not that sensitive to color. Codecs can take advantage of this human characteristic by keeping brightness information but throwing away some color information.

Rather than expressing a color value with three numbers representing colors (red, green, blue), we can express it as Y’CbCr (brightness, blue difference, red difference) and derive the missing color (green) by math, since brightness is just a combination of the three primaries. This lets us make the color information chunkier: one sample of color information may cover two or four pixels in the image. Because brightness is still stored for every pixel, the eye has a hard time spotting the difference.

In a 4:2:2 codec, such as ProRes 422, every pixel has brightness information, but for every four pixels in a row there are only two samples of color information in each of the blue and red channels. This can reduce file sizes considerably while remaining barely perceptible.
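
The sketch below walks through 4:2:2 on a single row of four pixels. It assumes the BT.601 full-range conversion formulas (one common variant) and averages each pair of color samples; real codecs may pick or filter samples differently, and the function names are ours.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert RGB to Y'CbCr using BT.601 full-range weights (an assumption here)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 + 0.564 * (b - y)   # blue difference
    cr = 128 + 0.713 * (r - y)   # red difference
    return y, cb, cr

def subsample_422(row):
    """Keep brightness for every pixel; share one Cb/Cr pair per two pixels."""
    luma = [y for y, _, _ in row]
    chroma = []
    for i in range(0, len(row), 2):
        pair = row[i:i + 2]
        cb = sum(p[1] for p in pair) / len(pair)
        cr = sum(p[2] for p in pair) / len(pair)
        chroma.append((cb, cr))
    return luma, chroma

rgb_row = [(200, 30, 30), (190, 40, 35), (20, 20, 220), (25, 30, 210)]
row = [rgb_to_ycbcr(*pixel) for pixel in rgb_row]
luma, chroma = subsample_422(row)

samples_before = len(row) * 3                 # Y', Cb, Cr for every pixel
samples_after = len(luma) + 2 * len(chroma)   # full Y', half-resolution Cb and Cr
print(samples_before, "->", samples_after)    # 12 -> 8
```

Four pixels go from 12 stored numbers to 8, a one-third saving before any other compression is applied.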

Spatial compression: We have talked about how Run-Length Encoding can bunch together the same color and indicate that it is being repeated. Spatial Compression is like that, but it fudges a little bit. If two colors are similar, then it reproduces them as being the same. This can cause the same sort of banding that you see in low bit-depth images.
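
To make the "fudging" concrete, here is a toy version of Run-Length Encoding that treats similar values as identical. It mirrors the simplified description above, not how any real codec is built (codecs such as H.264 work on blocks of pixels with more sophisticated math), and the tolerance value is an arbitrary choice of ours.

```python
def lossy_rle_encode(pixels, tolerance=4):
    """Merge a pixel into the current run if it is within `tolerance` of the run's value."""
    runs = []
    for value in pixels:
        if runs and abs(runs[-1][0] - value) <= tolerance:
            runs[-1][1] += 1          # close enough: pretend it is the same color
        else:
            runs.append([value, 1])   # too different: start a new run
    return [(value, count) for value, count in runs]

row = [100, 101, 103, 102, 150, 151, 149, 150]
print(lossy_rle_encode(row))  # [(100, 4), (150, 4)]
```

The subtle variation inside each patch is gone for good, which is exactly what shows up as banding when the tolerance is set too high.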

Temporal compression: Imagine a talking-head shot. On camera, we have a person talking and moving around a bit, but if the camera is on a tripod, the background isn’t moving. Why repeat the image information for the background areas if you can say “let’s repeat these patches of the image that don’t move for the next 50 frames”? When a codec does this, it is applying Temporal Compression.
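
A bare-bones sketch of the idea: store the first frame in full, then record only the pixels that change. Real codecs go further and predict motion between frames, so treat this as an illustration with made-up function names rather than an actual codec design.

```python
def encode_delta(previous, current):
    """Record only the pixels that changed since the previous frame."""
    return {i: new for i, (old, new) in enumerate(zip(previous, current)) if old != new}

def apply_delta(previous, delta):
    """Rebuild the current frame from the previous frame plus the changes."""
    frame = list(previous)
    for index, value in delta.items():
        frame[index] = value
    return frame

# Eight-pixel "frames": the background (10) stays put, the subject in the middle moves
frame1 = [10, 10, 10, 50, 60, 10, 10, 10]
frame2 = [10, 10, 10, 55, 65, 10, 10, 10]

delta = encode_delta(frame1, frame2)
print(delta)  # {3: 55, 4: 65}
assert apply_delta(frame1, delta) == frame2
```

Only two of the eight pixels need to be stored for the second frame. The static background costs nothing.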

Many of the most effective codecs use a combination of the common methods listed above or add in extra methods we haven’t discussed.

If you’re interested in producing a video, reach out to the video experts at IdeaRocket. We make healthcare videos, HR videos and more.